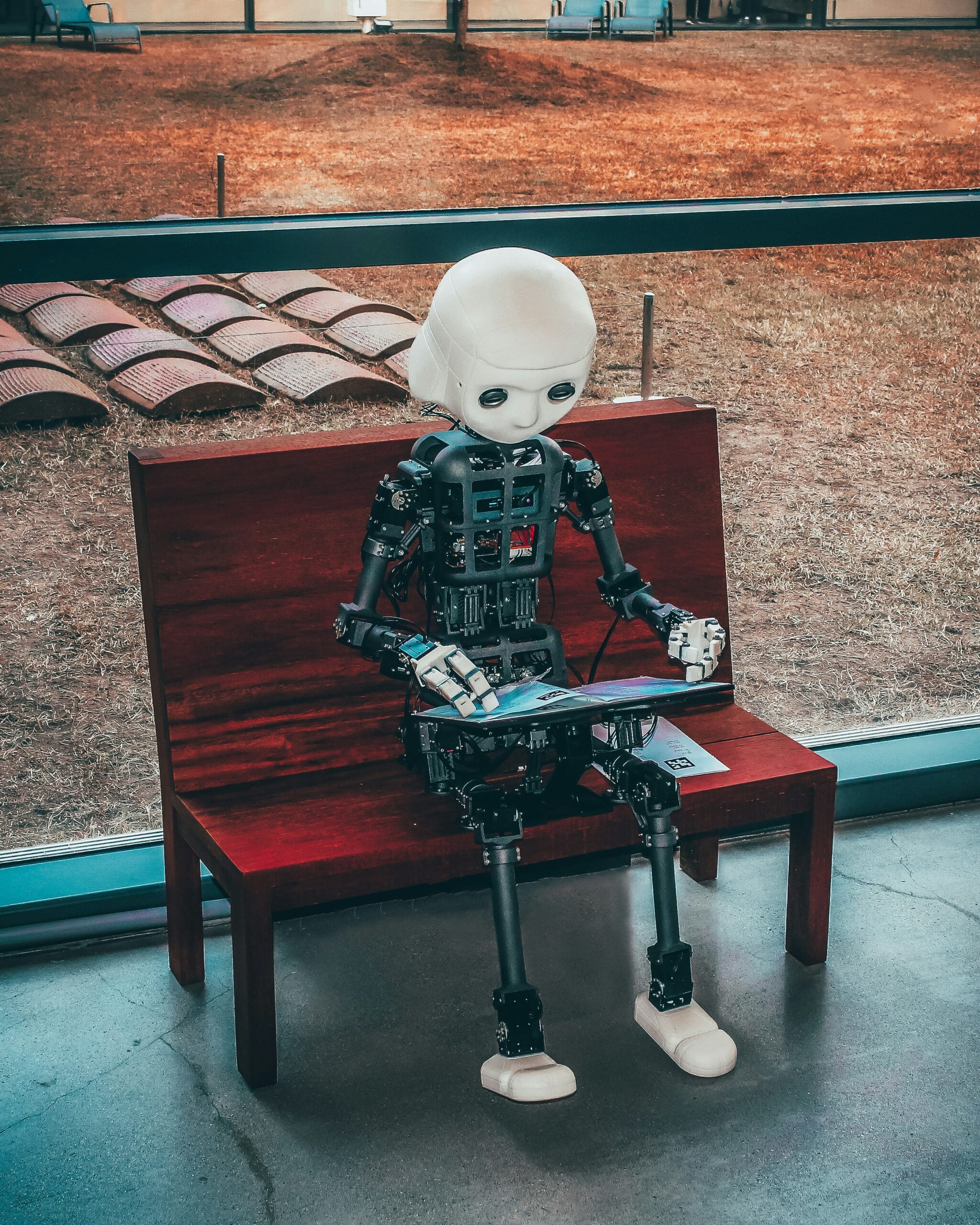

Introduction to AI and Its Growing Influence

Artificial intelligence (AI) has rapidly evolved from a theoretical concept into a practical force driving significant advances across many sectors. AI technologies, including machine learning, neural networks, and natural language processing, are increasingly integrated into healthcare, finance, transportation, and everyday consumer products. In healthcare, AI improves diagnostic accuracy, predicts patient outcomes, and personalizes treatment plans. The financial sector uses AI for fraud detection, risk management, and algorithmic trading, while the transportation industry benefits from autonomous vehicles and AI-driven traffic management systems.

AI's potential to transform these sectors is considerable, offering benefits such as greater efficiency, lower costs, and better-informed decisions. AI-powered consumer applications, such as virtual assistants and personalized recommendations, have become part of everyday life for many people. These advances not only streamline operations but also open new avenues for innovation and economic growth.

However, as AI spreads into more areas of society, it also brings significant risks that require careful consideration. Its rapid adoption has sparked debate about ethical implications, including privacy, bias, and the potential displacement of human jobs. AI systems that are not properly regulated can perpetuate existing inequalities and introduce new forms of discrimination. These concerns underscore the importance of AI ethics, which seeks to balance technological innovation with societal well-being.

To harness the benefits of AI while mitigating its risks, it is crucial to implement comprehensive regulations and policies. These measures should aim to ensure transparency, accountability, and fairness in AI development and deployment. By fostering a regulatory framework that promotes responsible AI practices, society can maximize the positive impact of AI technologies while safeguarding against potential adverse outcomes.

Identifying the Potential Risks of AI

The advent of AI has brought transformative changes across many sectors, but these advances come with significant risks that warrant careful consideration. One of the most prominent is job displacement through automation. As AI systems become more proficient at tasks traditionally performed by humans, there is growing concern that many jobs may become obsolete. This poses economic challenges and raises questions about the future workforce and the need for retraining and upskilling initiatives.

Another critical risk involves privacy, which is threatened by large-scale data collection and surveillance. AI technologies often depend on vast amounts of data. Gathering that data means collecting, storing, and analyzing personal information, which creates opportunities for privacy breaches. Facial recognition systems, for instance, can serve legitimate security purposes but can also be misused for mass surveillance, infringing on individuals’ right to privacy.

Biases embedded in AI algorithms also present substantial risks. When AI systems are trained on data that reflects historical biases, they can perpetuate and even amplify those biases, leading to unfair treatment and discrimination. Hiring algorithms, for example, may inadvertently favor certain demographic groups over others, reinforcing existing societal inequalities. Addressing these biases requires a concerted effort to design and train AI systems with fairness and inclusivity in mind.
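To make the hiring example more concrete, one common screening check compares how often a model selects applicants from each group. The sketch below is a minimal illustration under stated assumptions, not a complete fairness audit. It assumes each model decision is recorded as a (group, selected) pair, and it uses hypothetical data. It borrows the 0.8 threshold from the US EEOC's "four-fifths" rule of thumb for adverse impact. The function names are hypothetical and not part of any particular library.

```python
from collections import Counter

# Threshold borrowed from the EEOC "four-fifths" rule of thumb (an assumption here)
FOUR_FIFTHS = 0.8


def selection_rates(decisions):
    """Return the share of applicants selected in each group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the model recommended the applicant.
    """
    applicants = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        applicants[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / applicants[g] for g in applicants}


def impact_ratios(decisions):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no applicant was selected in any group")
    return {g: r / best for g, r in rates.items()}


def flagged_groups(decisions, threshold=FOUR_FIFTHS):
    """Return the groups whose impact ratio falls below the threshold."""
    return sorted(g for g, ratio in impact_ratios(decisions).items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical outcomes: 25 of 50 selected in group A, 15 of 50 in group B
    decisions = ([("A", True)] * 25 + [("A", False)] * 25
                 + [("B", True)] * 15 + [("B", False)] * 35)
    for group, ratio in sorted(impact_ratios(decisions).items()):
        print(group, round(ratio, 2))
    print("flagged:", flagged_groups(decisions))
```

In this hypothetical data, group B is selected at 60% of group A's rate, so it is flagged for review. A ratio below the threshold does not by itself prove discrimination, and passing the check does not prove fairness. It is only a signal that a closer look at the model and its training data is warranted.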

The potential for malicious use of AI is another area of concern. Deepfakes, which are fabricated but highly realistic videos, can be weaponized to spread misinformation or damage reputations. Similarly, AI-driven cyberattacks can compromise sensitive information and disrupt critical infrastructure. Such risks underscore the need for robust security measures and ethical guidelines to prevent misuse.

Unintended consequences of AI decision-making further complicate the landscape. Autonomous systems such as self-driving cars must handle complex ethical dilemmas, including split-second decisions in life-threatening situations. These scenarios highlight the need for clear ethical frameworks to guide the development and deployment of AI technologies.

Real-world cases illustrate these risks vividly. The use of predictive policing algorithms in law enforcement, for instance, has raised concerns about racial bias and the unjust targeting of minority communities. Such examples show why the inherent risks of AI must be addressed if its benefits are to be realized without compromising ethical standards and societal values.

The Necessity of AI Regulations and Policies

The rapid advancement of AI has brought numerous benefits and conveniences to modern society. However, it also carries inherent risks that call for comprehensive regulations and policies. Governments, international bodies, and industry leaders all have a crucial role in creating robust frameworks that ensure AI is developed and deployed responsibly.

One of the primary concerns is the transparency of AI systems. As AI becomes integrated into sectors from healthcare to finance, these systems must operate transparently. Transparency makes it possible to audit and understand how an AI system reaches its decisions, which is vital for maintaining public trust and ensuring accountability. Without it, biased or unfounded decisions can go undetected and uncorrected, leading to unfair outcomes.

Accountability is equally imperative. Developers and operators of AI technologies must be held responsible for the outcomes their systems produce. That responsibility includes ensuring the systems do not violate legal or ethical standards. Clear lines of accountability make it easier to resolve problems when they arise and deter misuse of AI technologies.

Protecting individual privacy and data security is another critical aspect of AI regulation. AI systems often rely on vast amounts of personal data to function effectively. Therefore, stringent measures must be in place to safeguard this data from breaches and misuse. Policies that enforce data minimization, consent-based data collection, and secure storage are essential in protecting individuals’ privacy.

Furthermore, implementing ethical guidelines is crucial to the responsible development and deployment of AI. Ethical guidelines provide a moral compass for AI development, ensuring that these technologies benefit society as a whole without causing harm. These guidelines should address issues such as bias, fairness, and the societal impact of AI technologies.

Several regions and industries have already taken steps toward AI regulations and best practices. The European Union’s General Data Protection Regulation (GDPR), for instance, sets a high standard for data protection and privacy, including rules on automated decision-making that apply to many AI systems. Various industry-led initiatives also focus on creating ethical AI frameworks. These efforts offer useful models for other regions and industries seeking to develop responsible AI regulations.

Strategies for Effective AI Governance

Effective AI governance necessitates a multifaceted approach, integrating diverse perspectives from various stakeholders. A critical component in crafting comprehensive AI policies is stakeholder collaboration. This involves engaging technologists, lawmakers, ethicists, social scientists, and industry leaders to ensure that policies are well-rounded and considerate of all potential impacts. By fostering an inclusive dialogue, stakeholders can better anticipate the implications of AI technologies and devise strategies that mitigate risks while maximizing benefits.

Public engagement is another cornerstone of effective AI governance. Transparent communication with the public about the benefits and risks associated with AI technologies helps build trust and ensures that societal values and concerns are reflected in regulatory frameworks. Mechanisms such as public consultations, forums, and surveys can be instrumental in gauging public sentiment and incorporating it into policy-making processes.

Continuous monitoring and evaluation of AI systems are essential for keeping pace with rapid technological change. Robust oversight mechanisms help identify and address issues as they arise, keeping AI systems within ethical and legal boundaries. Regular audits, impact assessments, and adaptive regulatory frameworks are key tools in this ongoing process.

Interdisciplinary approaches are paramount for effective AI governance. Combining insights from technology, law, ethics, and social sciences enables a holistic understanding of AI’s implications. This interdisciplinary collaboration can lead to the development of balanced policies that address technical feasibility, legal compliance, ethical considerations, and social impact.

Global cooperation is imperative given the borderless nature of AI technologies. International collaboration can help harmonize regulations, set global standards, and ensure that AI advances benefit all of humanity. Organizations such as the United Nations and the World Economic Forum, along with other global bodies, can play pivotal roles in fostering such cooperation.

In conclusion, stakeholders must prioritize responsible AI development. By adopting comprehensive governance strategies, engaging in interdisciplinary collaboration, and fostering global cooperation, we can navigate the complexities of AI and harness its potential for the greater good.